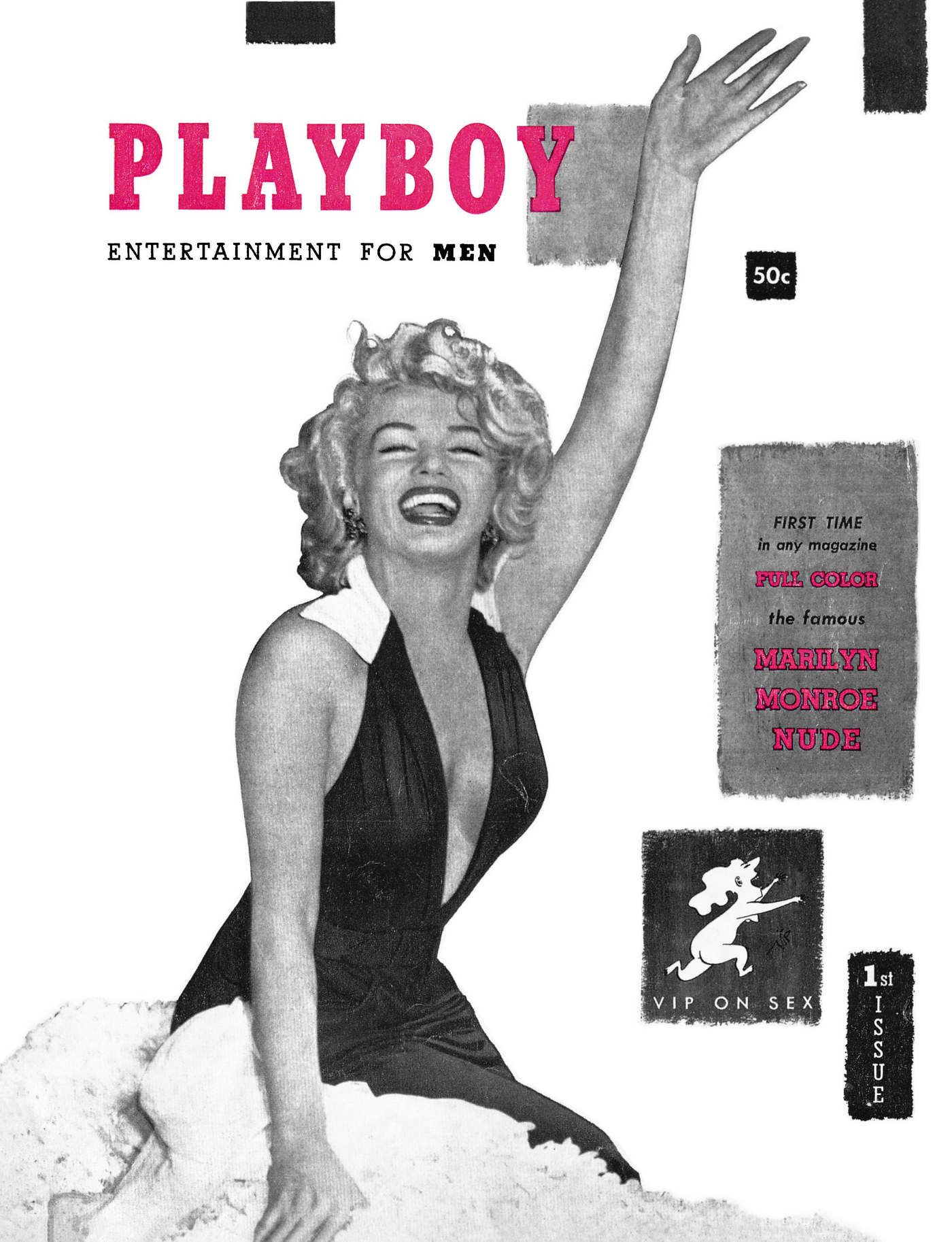

Playboy Magazine

Read it for the articles—or don’t! Unlock unlimited access to the complete PLAYBOY magazine archive. Every Playboy cover. Every Playboy interview. Every Playboy Playmate. Since 1953.

Explore Every Issue

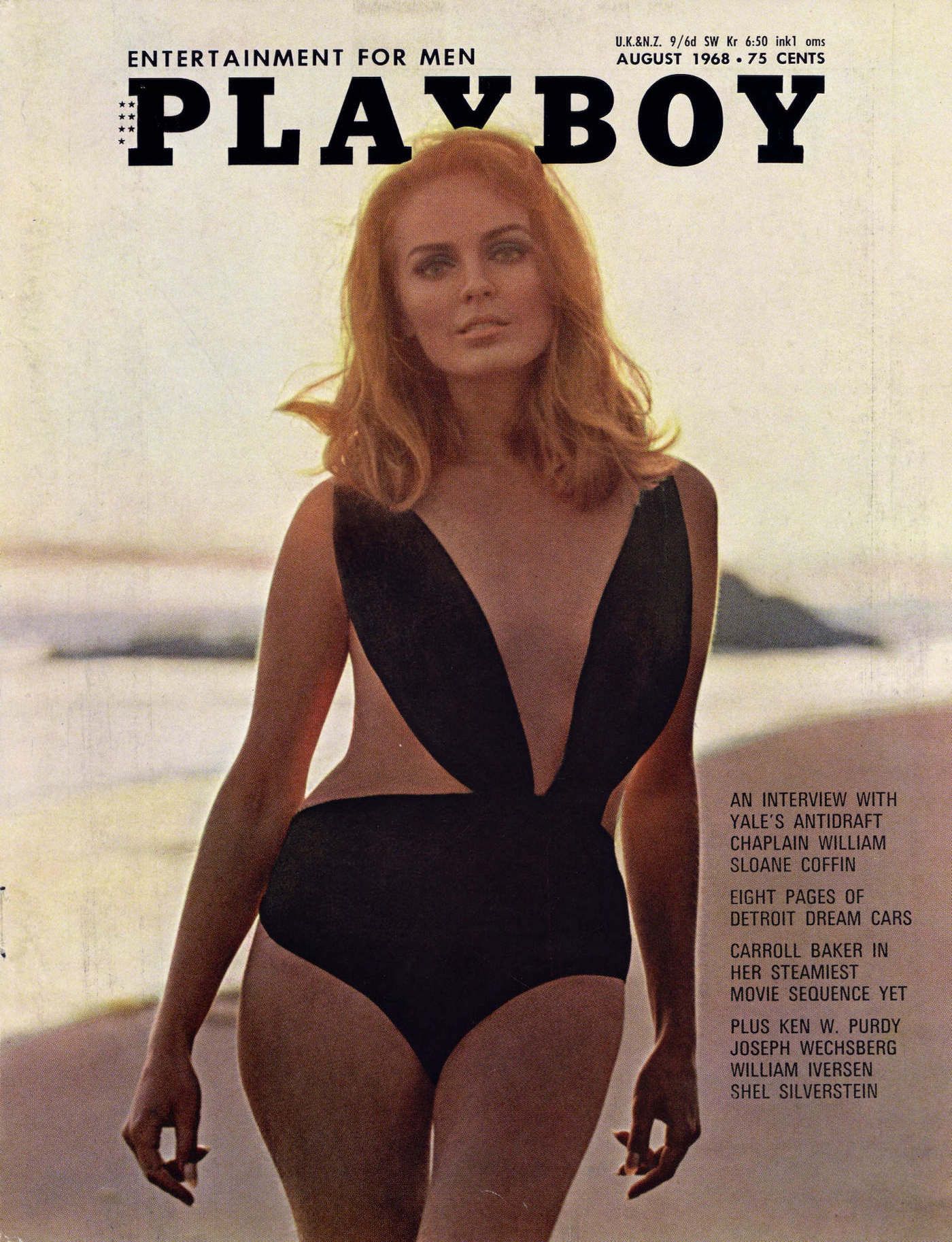

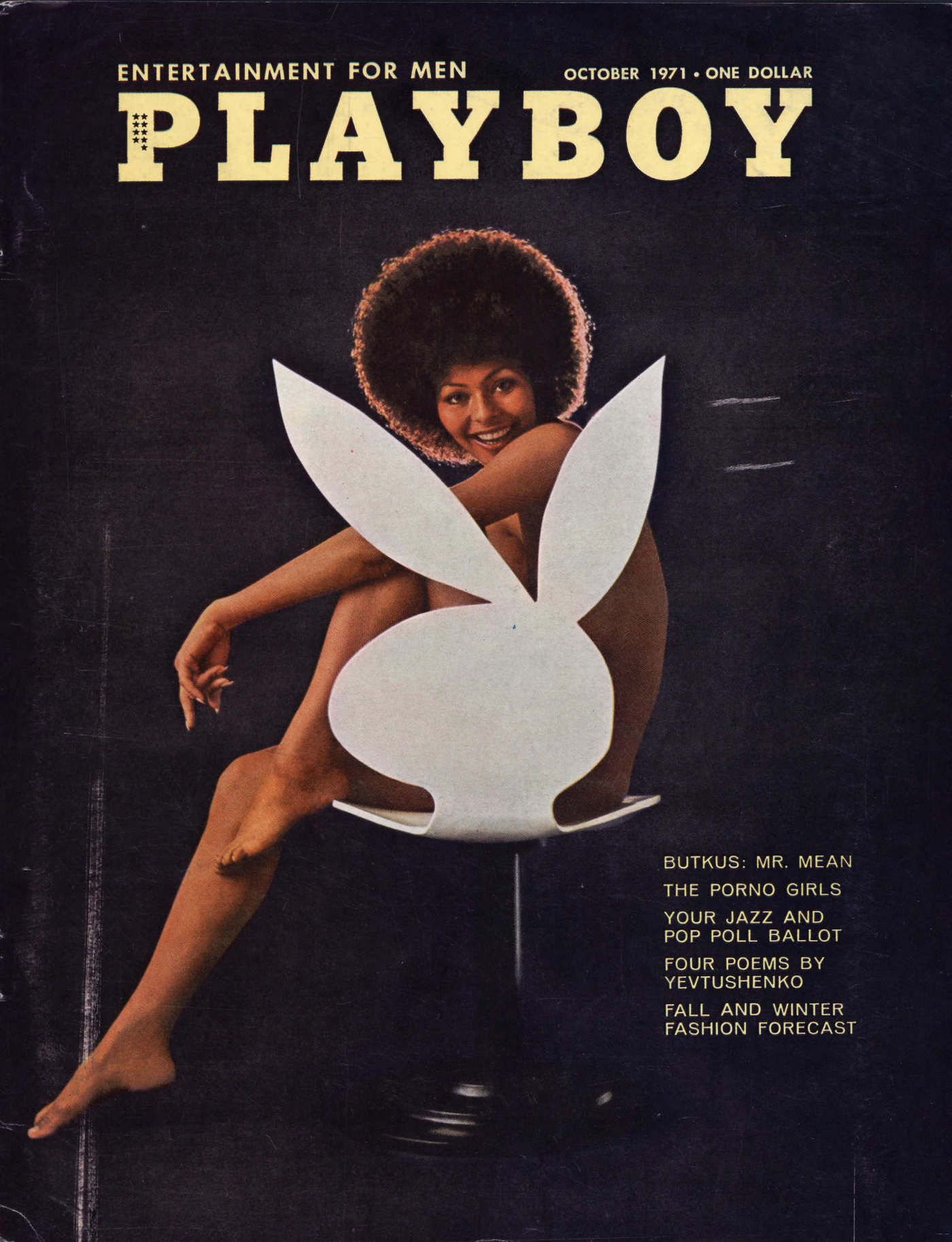

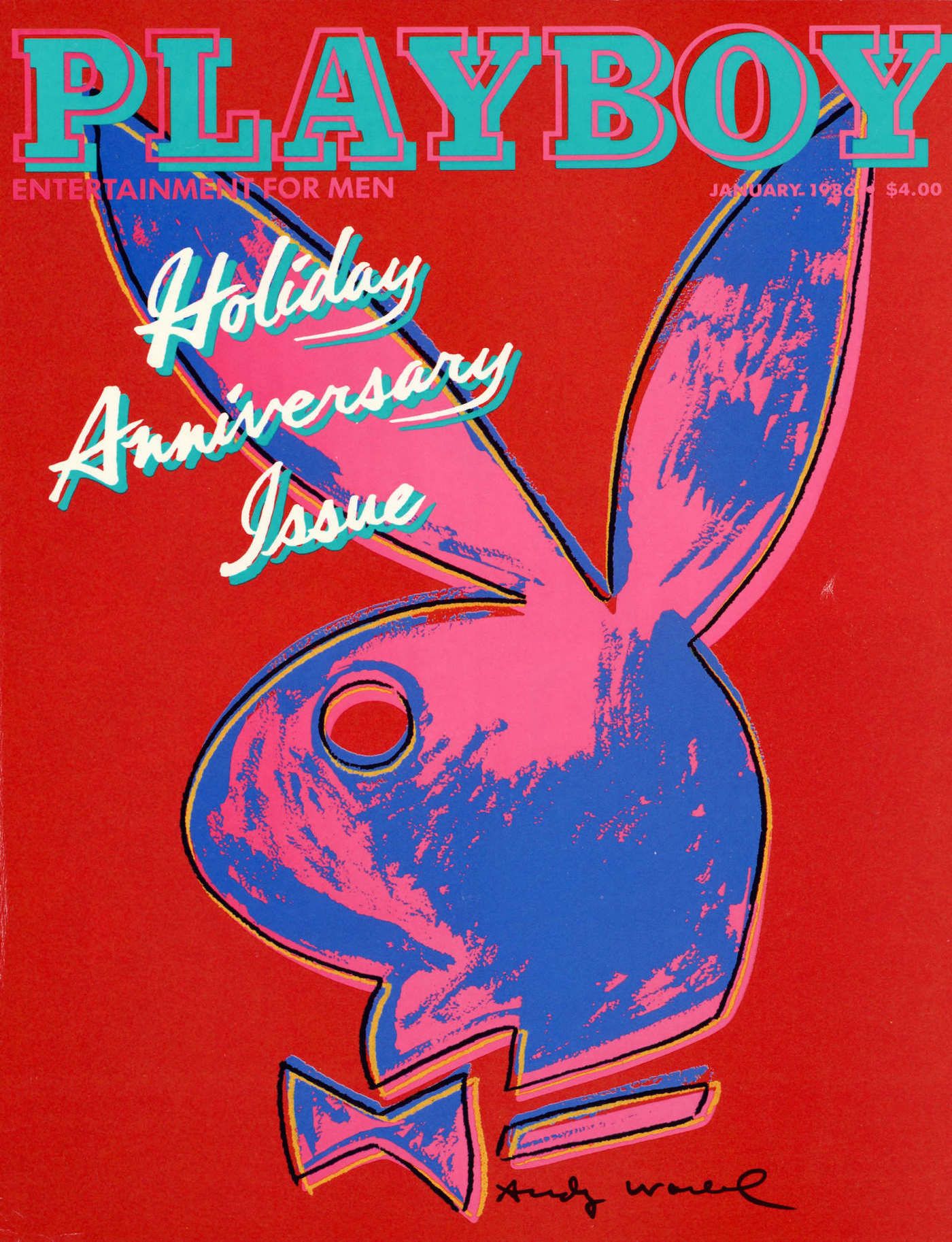

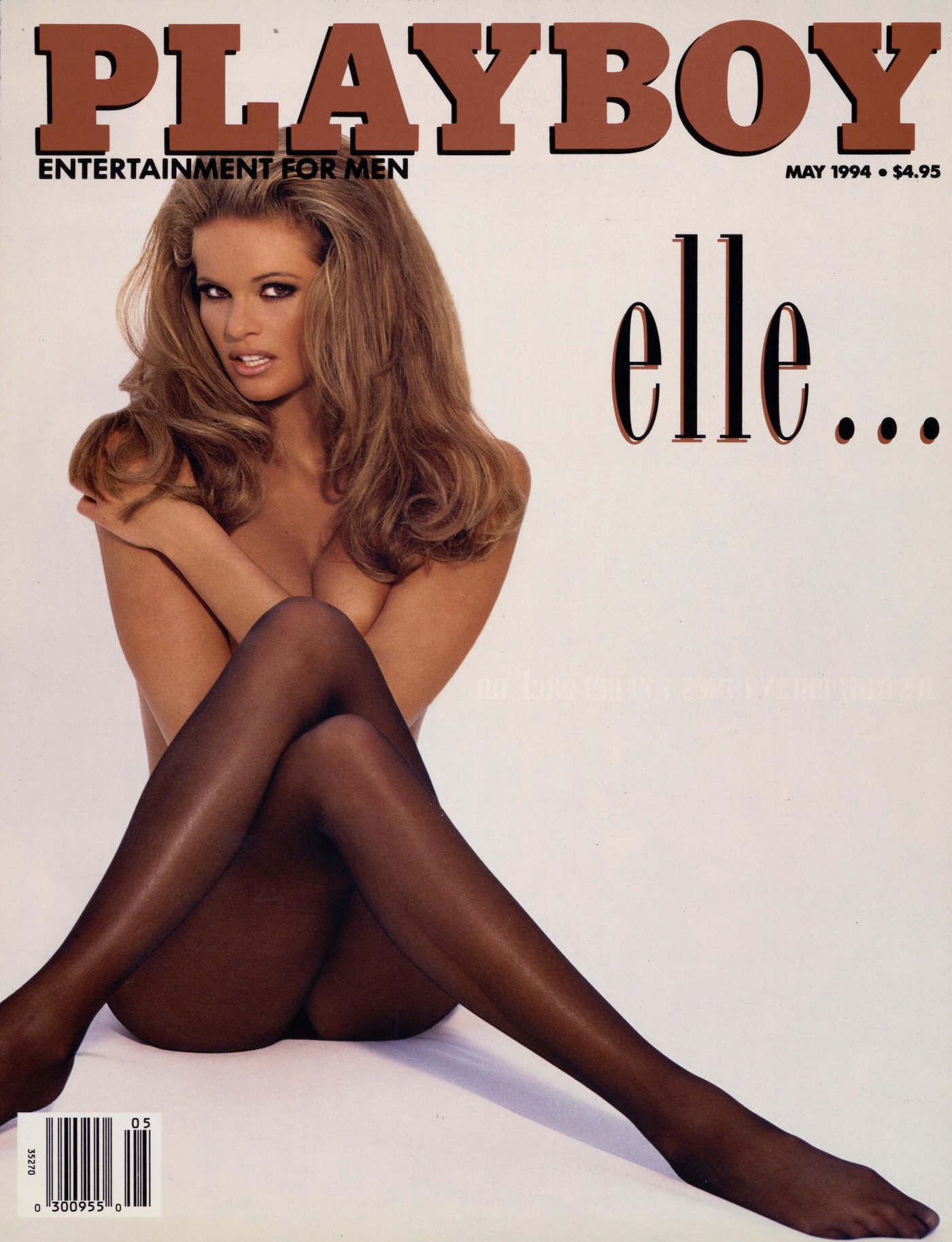

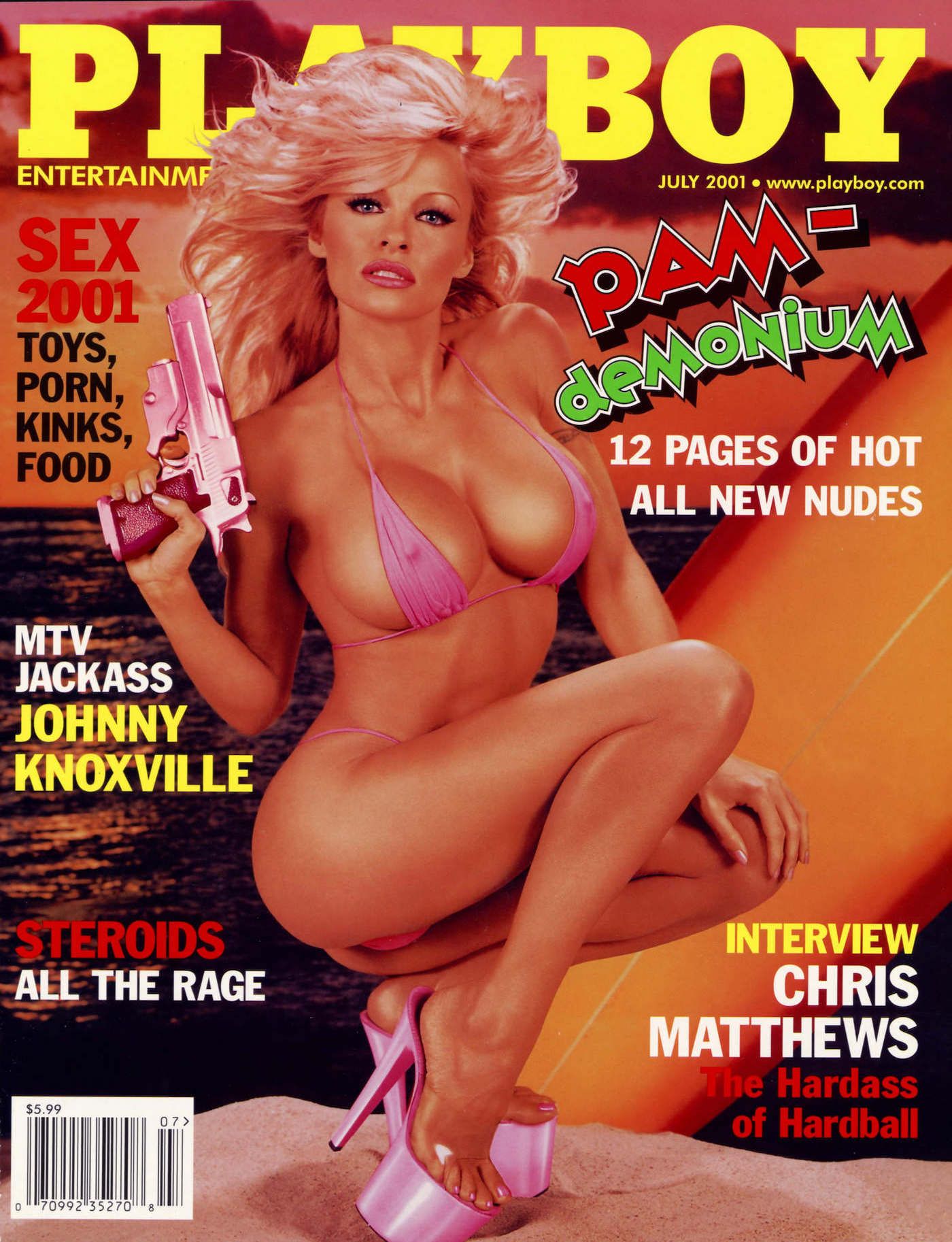

FEATURED ISSUES

A snapshot of some of our most iconic issues–and cover stars.

December 1998

Inside the Playboy Mansion

In a deliciously escapist photo essay, famed journalist Bill Zehme guides PLAYBOY readers on an exclusive tour of the Playboy Mansion. Among his discoveries: pajama parties, peacocks (yes, the Mansion was home to a private zoo) and many a Playmate. Part home, part office and part year-round party venue, the fabled landmark comes to life for readers through behind-the-scenes photos, private scrapbooks and anecdotes from Mansion regulars.

Hop Into Our Heritage

70 years of pleasure and progress, on and off the pages of the magazine.

May 1966

Playmate First Class: Jo Collins in Vietnam

In 1966, Jo Collins, Playboy’s December 1964 Playmate and 1965 Playmate of the Year, aptly nicknamed G.I. Jo, delivered a lifetime subscription to PLAYBOY magazine to the front lines in South Vietnam. Thanks to special government clearance, Collins delivered the magazine, and a much-needed morale boost, to the officers of Company B, who had specifically requested her attendance in a letter to the PLAYBOY Editors.

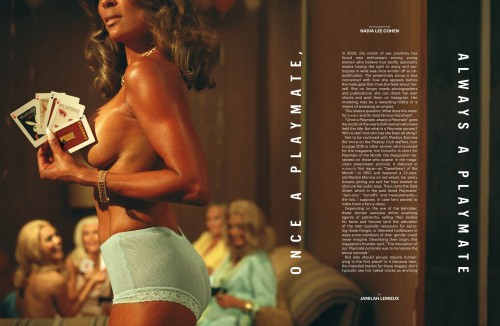

Playmates

Once a Playmate, always a Playmate–get to know our most important brand ambassadors.

Celebrity Pictorials

Iconic beauties who have graced our pages.

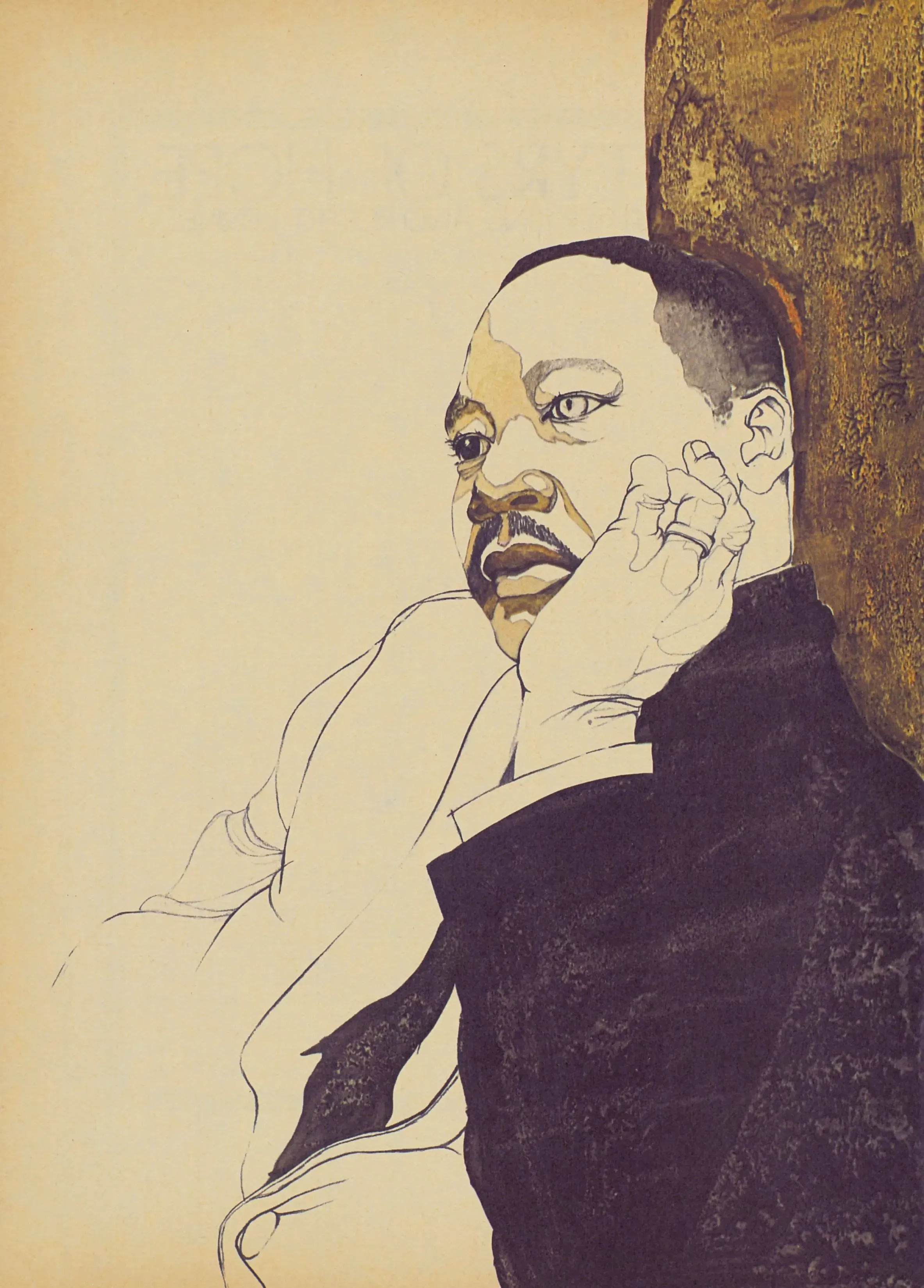

January 1969

A Testament of Hope

In January 1969, PLAYBOY published a piece that would come to stand the test of time: Martin Luther King, Jr.’s essay A Testament of Hope, the civil rights leader’s final statement, written before his murder in the spring of 1968 and published posthumously. The urgent missive has since become one of the world’s most powerful writings on nonviolence, policy, Black nationalism and humanity itself.

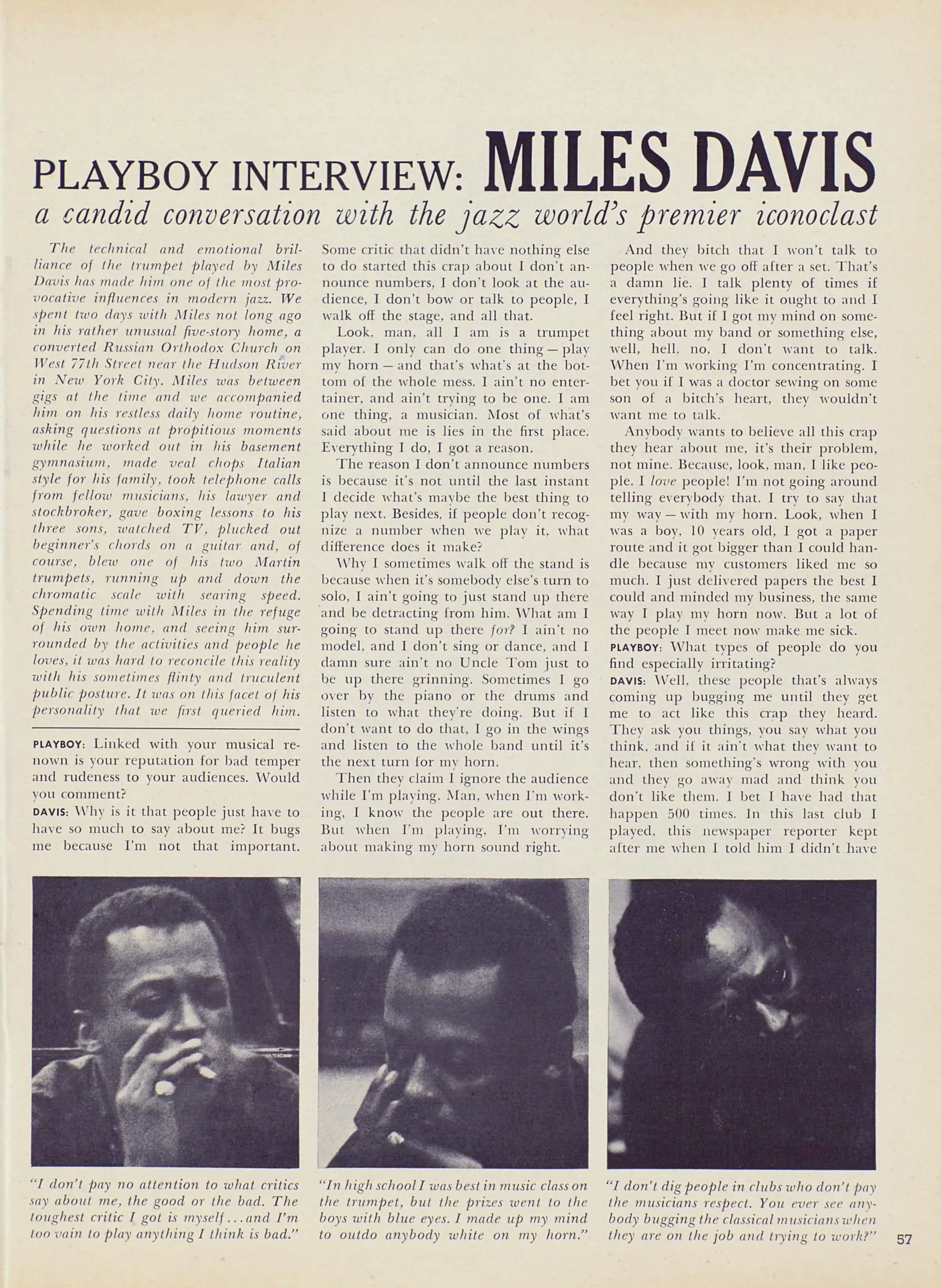

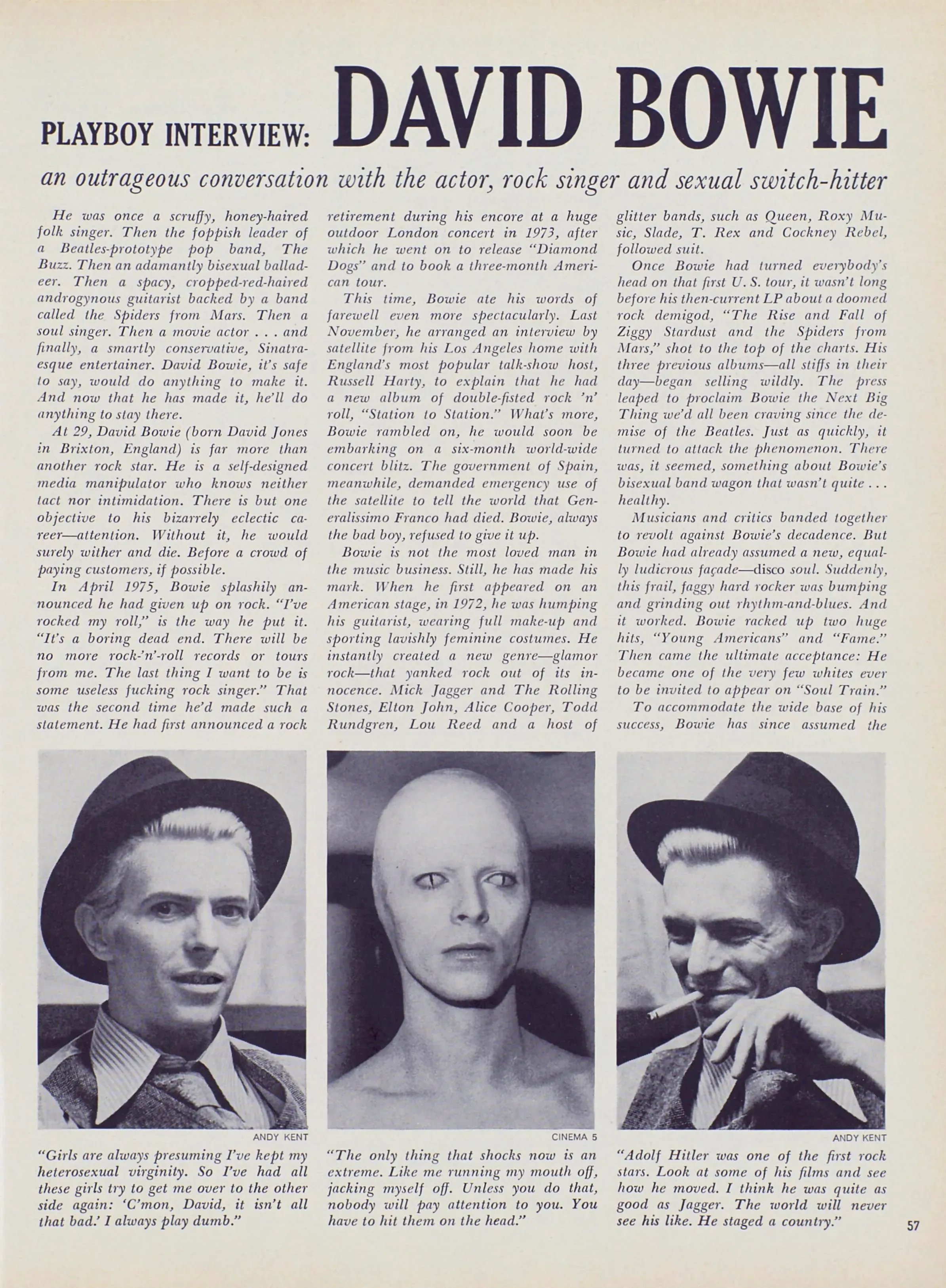

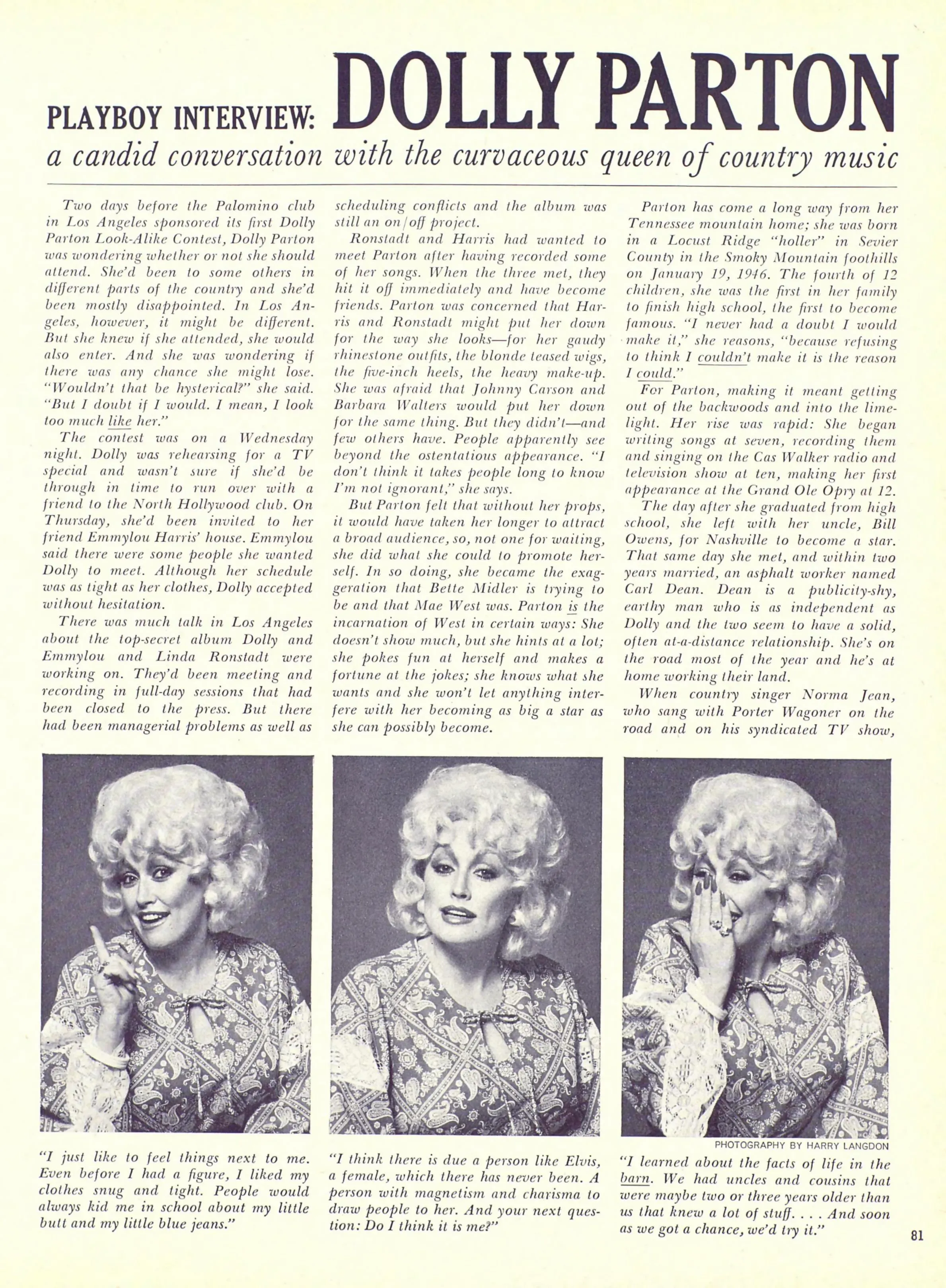

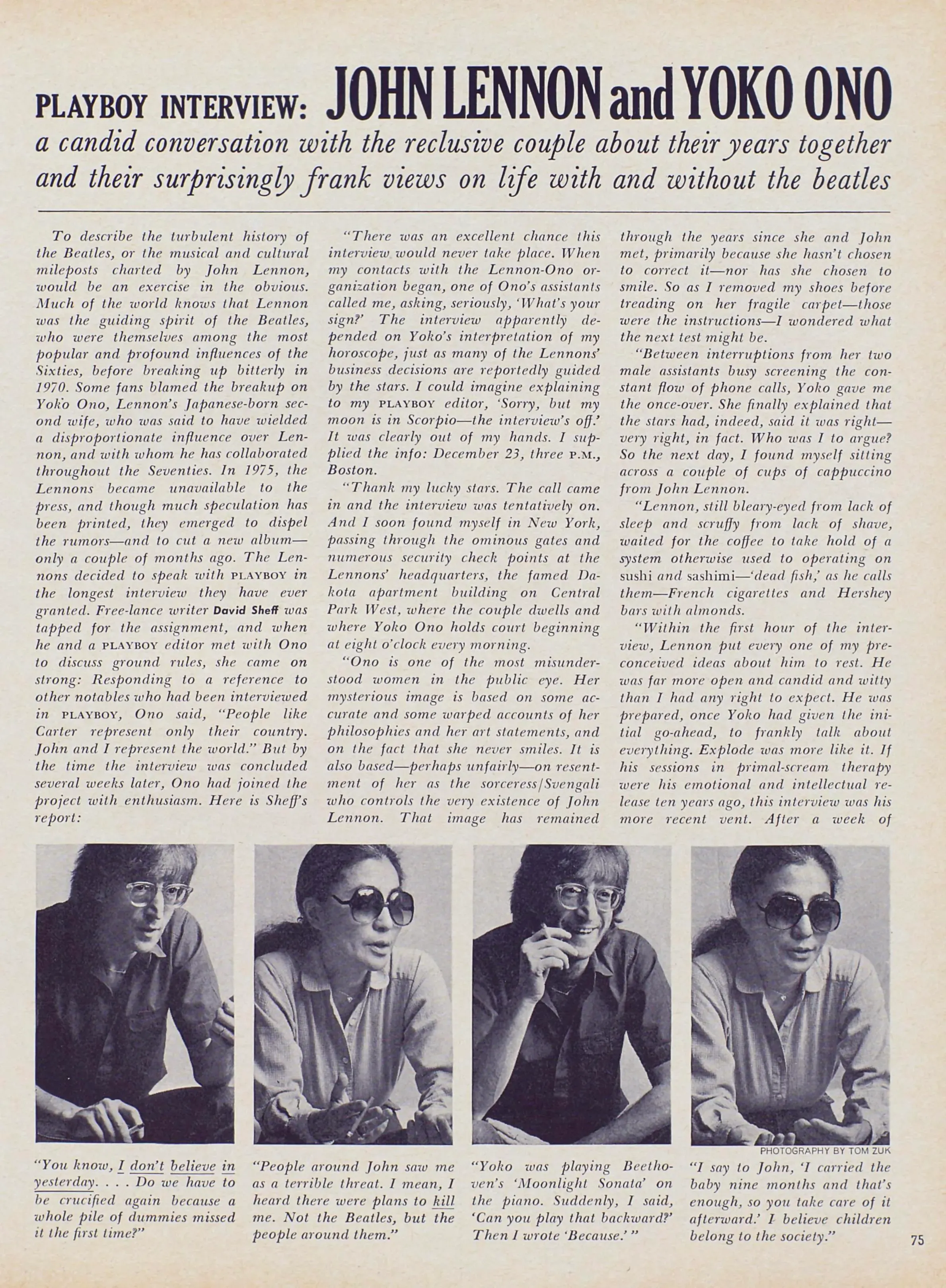

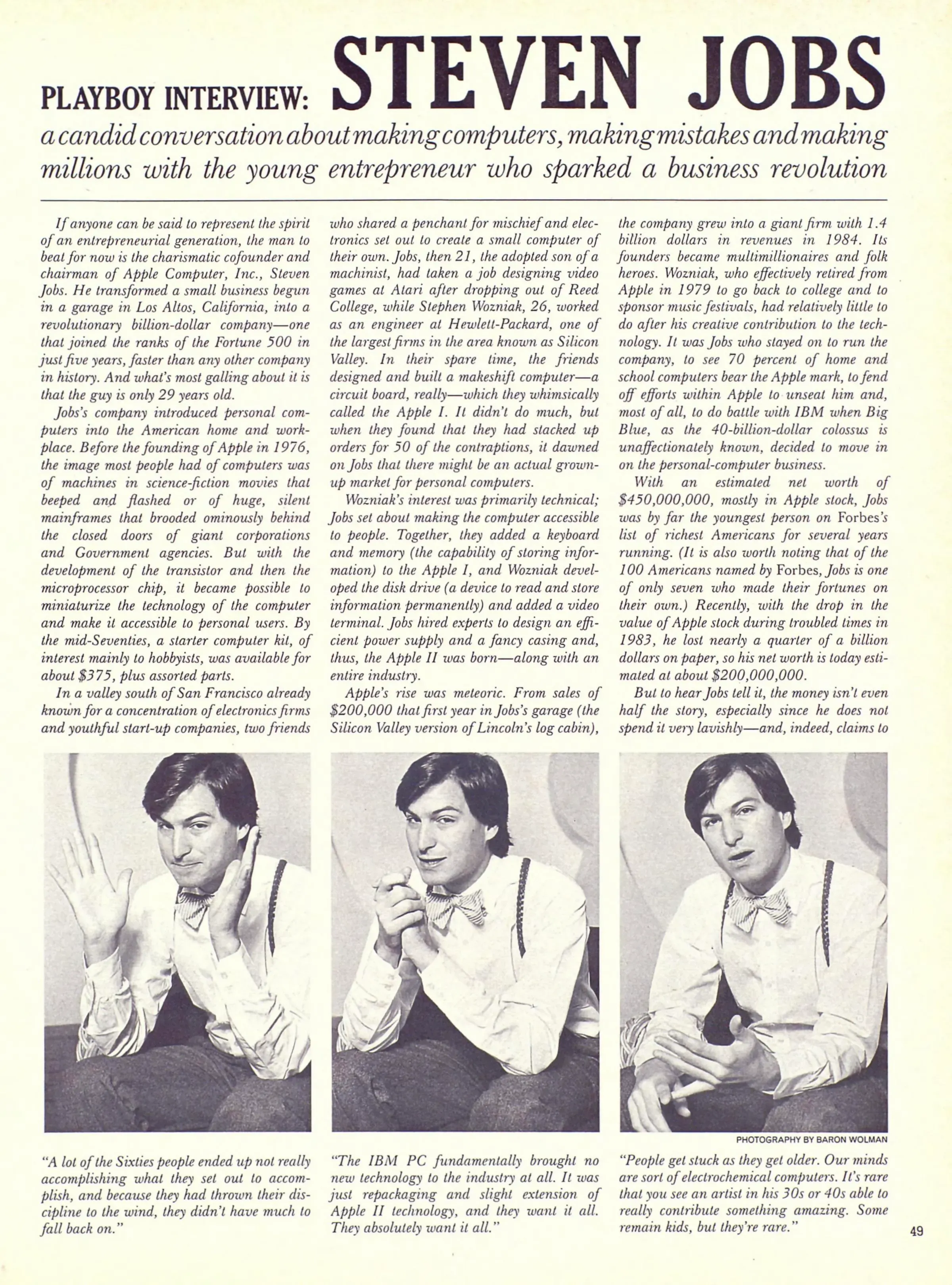

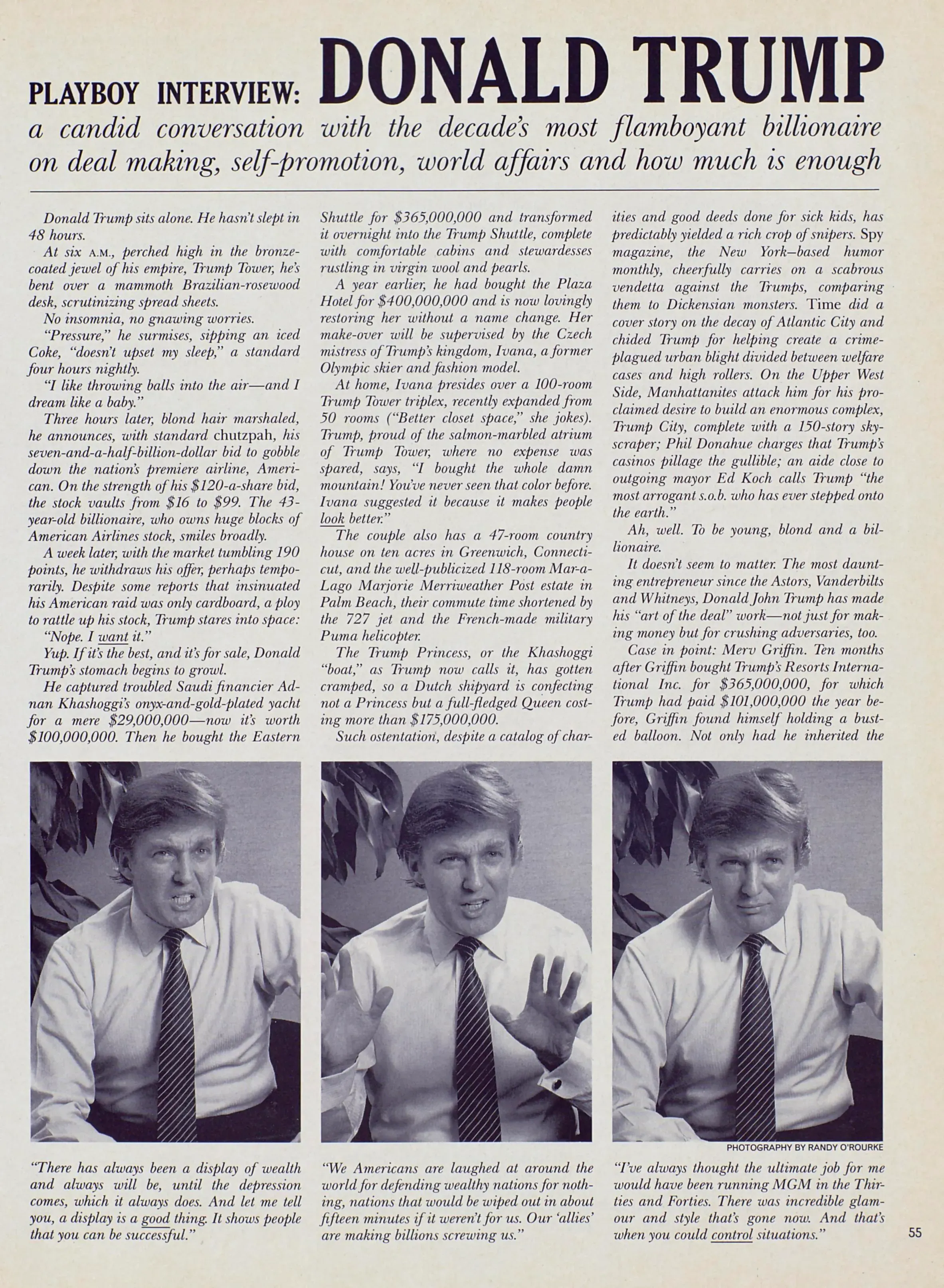

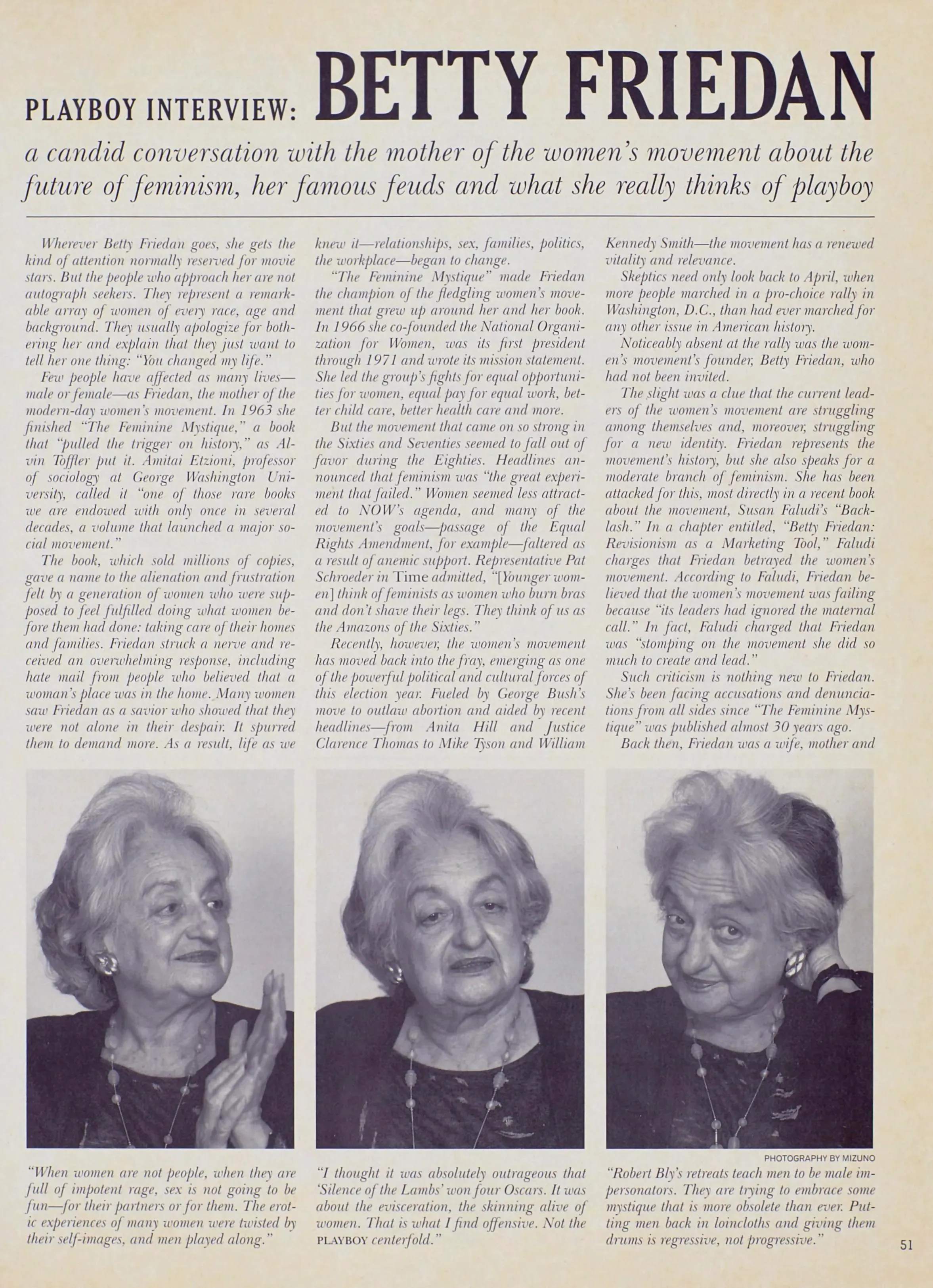

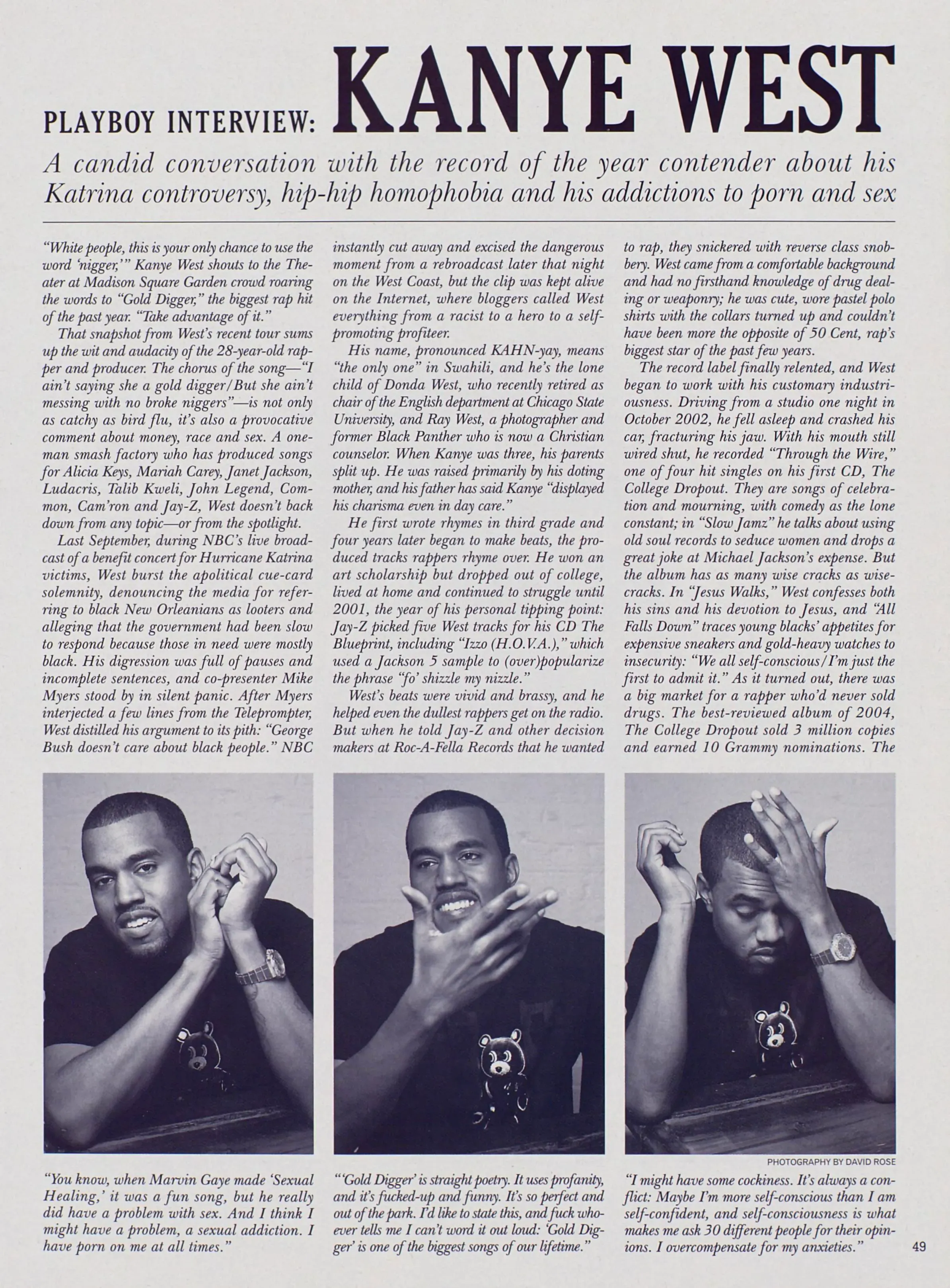

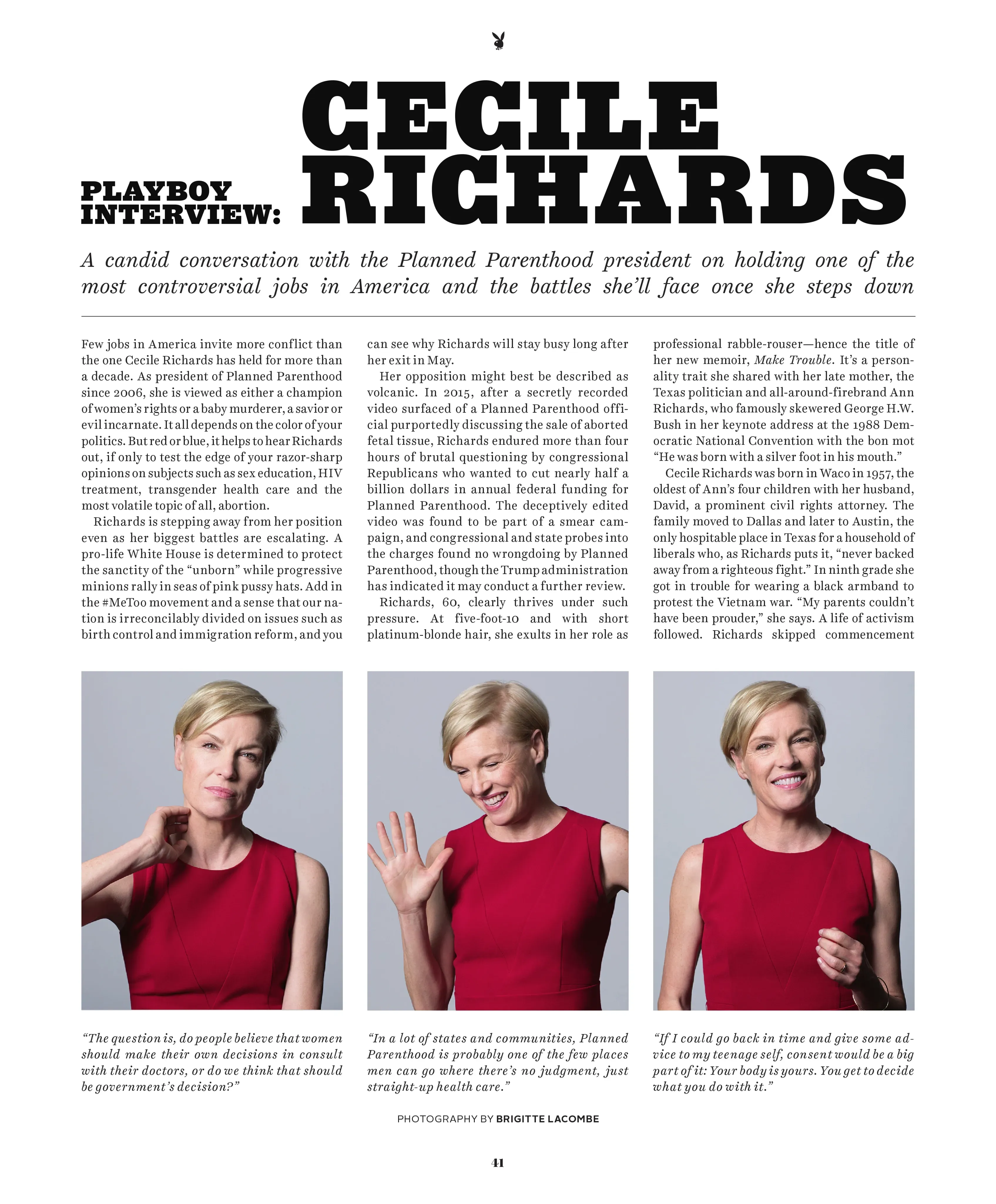

The Playboy Interview

Seven decades of candid conversation with the world’s most legendary cultural figures.

September 1991

The Transformation of Tula

In 1981, on the heels of her appearance in the pages of PLAYBOY to celebrate her role as a Bond girl in For Your Eyes Only, fashion model Caroline “Tula” Cossey was outed as a transgender woman by the tabloids. Nearly a decade later, Tula returned to PLAYBOY to share her story–on her terms–and to pose for another pictorial, marking the first solo pictorial for an openly transgender woman in PLAYBOY.

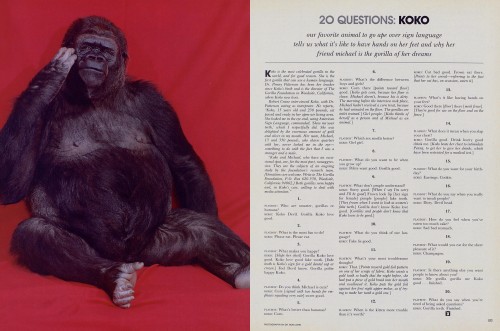

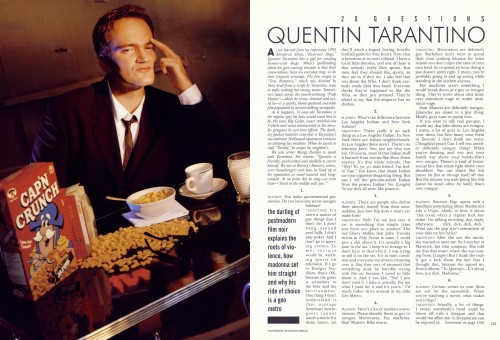

20Q

One personality. 20 provocative questions. Uncensored tête-à-têtes with the tastemakers who are driving pop culture.

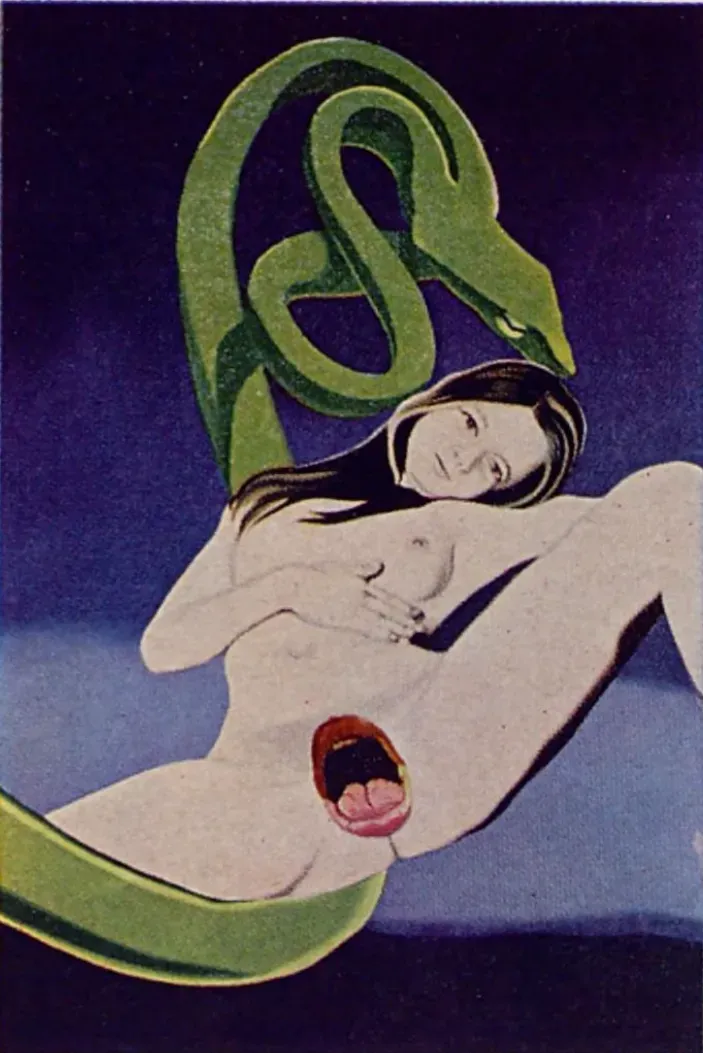

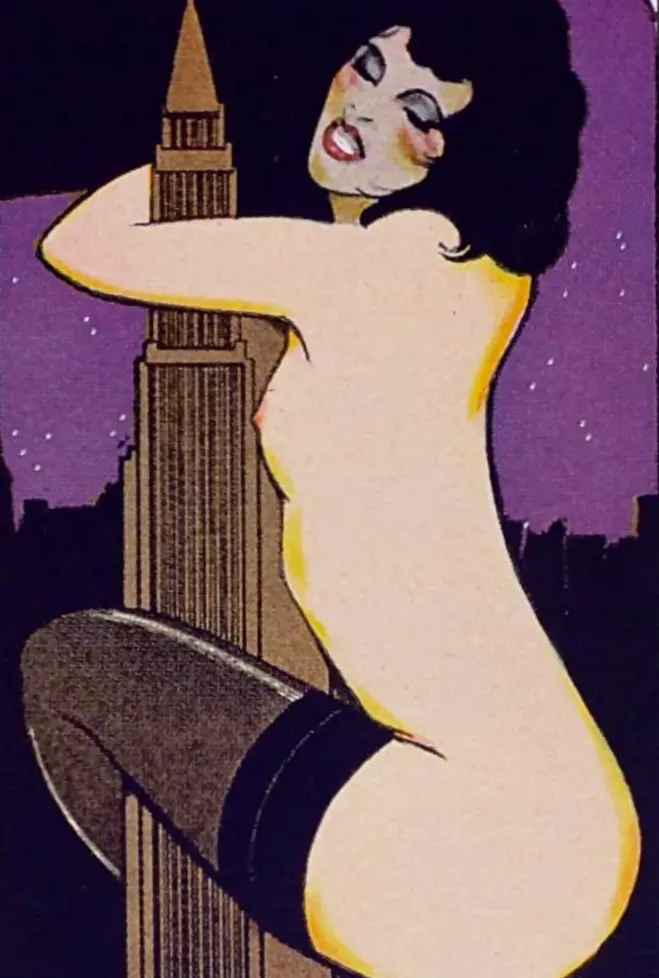

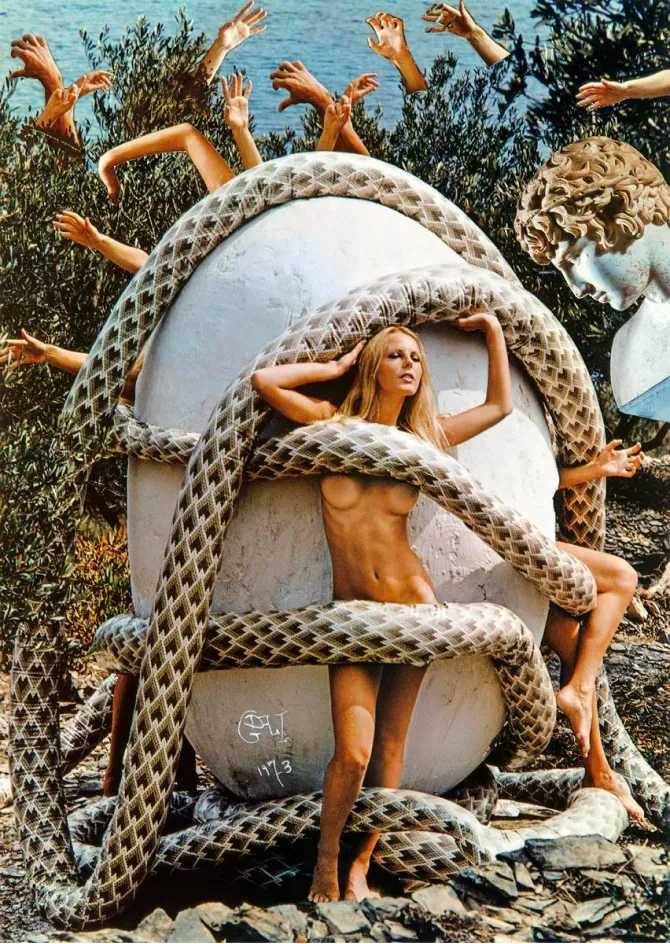

December 1974

The Erotic World of Salvador Dalí

In the early 1970s, PLAYBOY asked surrealist genius Salvador Dalí to conceptualize his erotic fantasies, then dispatched staff photographer Pompeo Posar to the small Spanish village of Cadaqués to help the artist realize them. Featuring an unexpected medley of oversized eggs, ancient architecture, and a bevy of nude models, the outrageous mise-en-scène is perhaps one of the strangest, yet most delightful, pictorials in Playboy history.

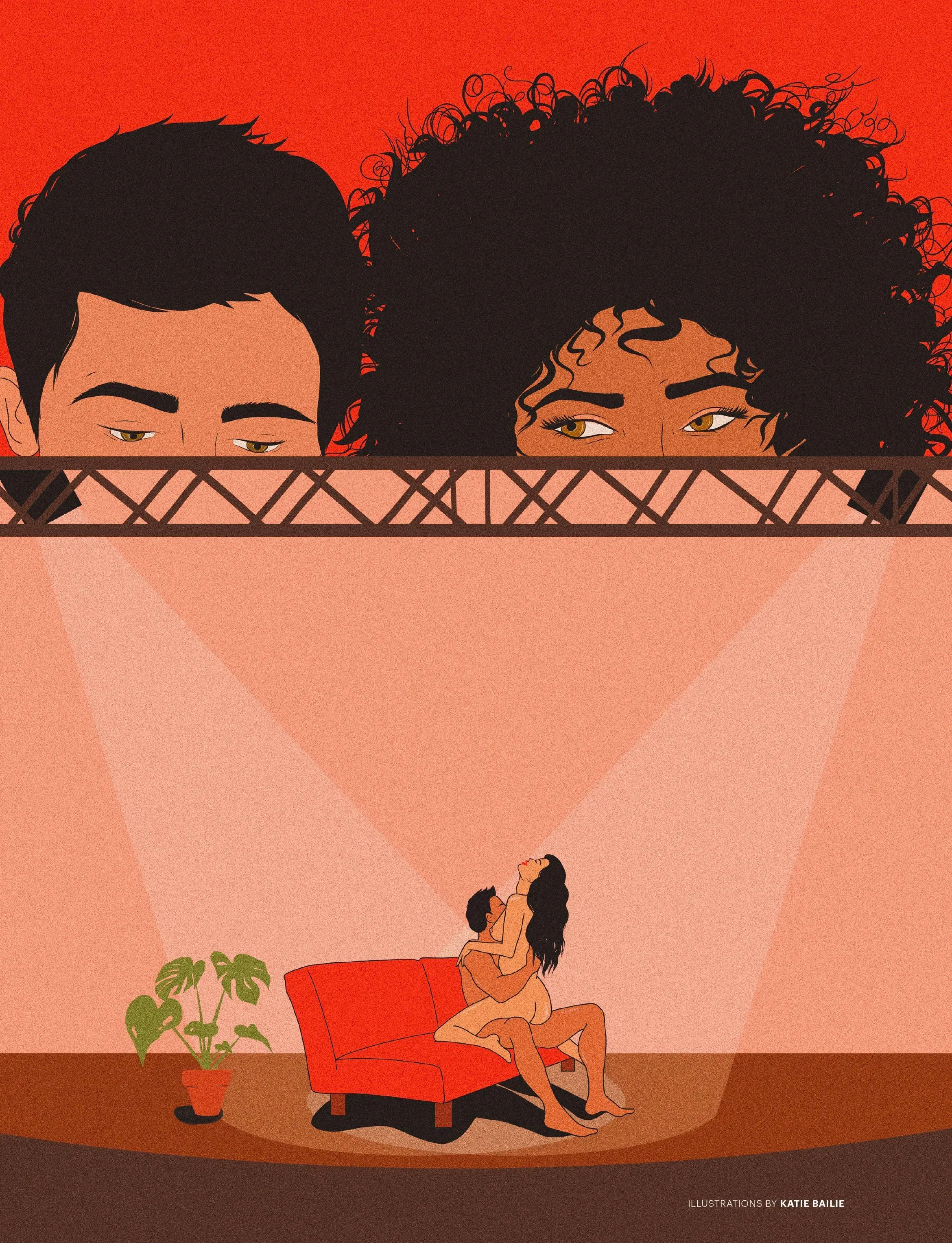

Playboy Advisor

From grope suits to spanking etiquette, our sex-positive advice column has been satisfying curious readers on matters of the heart, the body and the bedroom for generations.

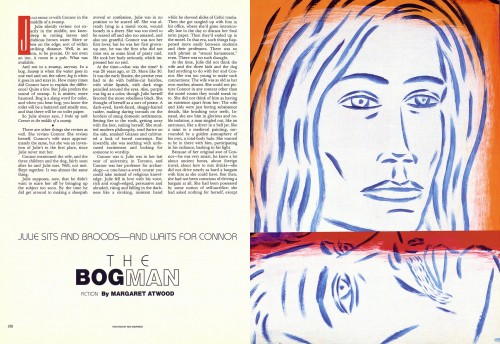

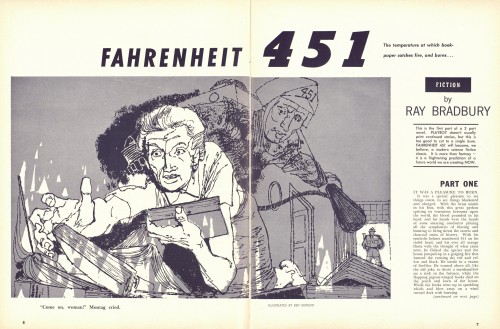

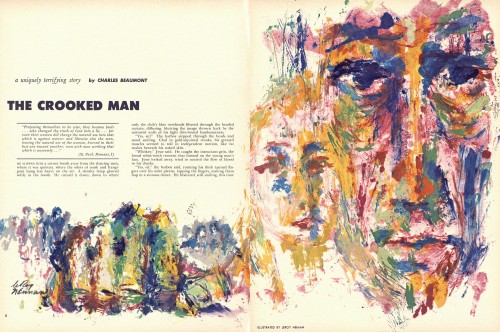

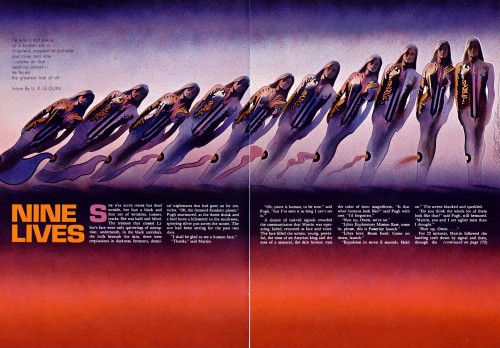

Playboy Fiction

Pages from–and for–the ages. A collection of our favorite literary moments.